4 Mistakes to Avoid as a CIO in 2020

As CIOs are finalizing their strategic initiatives for 2020 that invariably involve moving to the cloud, modernizing custom applications and investing in data science initiatives, here is a list of missteps to avoid during that journey.

1. Don’t move to the Cloud without a clear plan.

Cloud migrations can be disappointing

As a CIO, you might feel that you are missing the boat if you don’t have plans to move to the Cloud. After all, the Cloud is the wave of the future, promising that businesses can bring their applications online faster without worrying about the capital expenditure of building data centers. By moving to the Cloud, your organization would also be able to elastically adjust the compute and storage resources it consumes, on demand and independently of each other, because cloud architectures separate storage from compute. It’s a win-win, right? Not quite: it depends on the objective that you are trying to achieve by moving your infrastructure to the Cloud.

First, realize that once you move to the Cloud, the meter is always running. Companies that rushed to the Cloud finished their first phase of projects and realized that their operating expenses had increased, because the savings in human operators were surpassed by the cost of Cloud compute resources for applications that are online most of the time. Resources that used to be capitalized when deployed on-premises now hit the P&L as operating expenses in the Cloud, and the resulting numbers are typically much higher.
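To make the "meter is always running" point concrete, here is a minimal back-of-the-envelope sketch. The hourly rate, instance count, and usage hours are hypothetical figures chosen only for illustration, not real cloud prices, and the function name `monthly_compute_cost` is an assumption of this example.

```python
HOURS_PER_MONTH = 730  # approximate average number of hours in a month


def monthly_compute_cost(hourly_rate, instances, hours_online):
    """Pay-as-you-go compute cost: every online hour of every instance is billed."""
    return hourly_rate * instances * hours_online


# Hypothetical figures, for illustration only.
always_on = monthly_compute_cost(hourly_rate=0.50, instances=10,
                                 hours_online=HOURS_PER_MONTH)
business_hours = monthly_compute_cost(hourly_rate=0.50, instances=10,
                                      hours_online=8 * 22)  # 8 h/day, 22 days

print(f"Always-on application: ${always_on:,.2f} per month")
print(f"Business-hours only:   ${business_hours:,.2f} per month")
```

Under these assumed numbers, an application that never goes offline costs roughly four times as much in compute as one that runs only during business hours, which is why elasticity does little for workloads that must always be online.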

This brings us to the goal that your organization is trying to achieve by moving to the Cloud. If your objective is to enrich your mission-critical applications by incorporating new data sources such as sensors, IoT devices, and social media, or by injecting artificial intelligence and machine learning into them, merely moving to the Cloud won’t do the trick. The burden of integrating various technologies into a unified platform continues to rest on the shoulders of IT. In this scenario, the effort required to build an integrated scale-out infrastructure that can leverage diverse data sources and make intelligent decisions using machine learning is not markedly different in the Cloud than on-premises. In other words, simply migrating your infrastructure to the Cloud won’t make your legacy applications agile, scalable, and intelligent.

2. Don’t rewrite your legacy applications from scratch.

Rewriting applications is unnecessarily destructive

You are under pressure from the business to modernize some of its mission-critical applications. These may be applications for underwriting, pre-authorization, fraud detection, prevention, know-your-customer / next-best-action. These applications, once the crown jewels of insurance companies, have now become “legacy.” They have become slow because the volume of data that they are required to process has increased tremendously. Also, they were never designed to operate on the diverse and unstructured data that is becoming the norm, including data generated from sensors, IoT devices, and social media. Most importantly, the volume and pace of online transactions require these applications to be smart and to take intelligent action in real time without human intervention.

It’s no wonder that, given the business’s requirements to be agile, scalable, and smart, you think you have no other choice but to rewrite these applications entirely on a modern platform. And if you were to take that course of action, you would be partially correct. Yes, you do have to re-platform these applications on a platform that can scale out as the volume of data grows, is capable of storing and analyzing diverse data, and can inject artificial intelligence into the application, but you don’t have to rewrite the application itself. An apt analogy is the old Victorian houses in San Francisco, which represent the same kind of value for their owners that legacy applications do for enterprises. To safeguard these houses from earthquakes, they have to be retrofitted using the latest architectural techniques, which involve lifting the house and bolting it to the foundation. By doing this, the civil engineering and construction company preserves the architectural look and history of the house while protecting it from earthquake damage.

A database migration can be as effective as an earthquake retrofit

In the same way, by keeping the application intact and placing it on a modern foundation, you preserve the familiar interface and the business logic that is the secret sauce of your company, and at the same time you accommodate the volume and variety of big data and make the application intelligent through artificial intelligence and machine learning. By taking this approach, you also save your organization from embarking on an expensive, lengthy, and risky project.

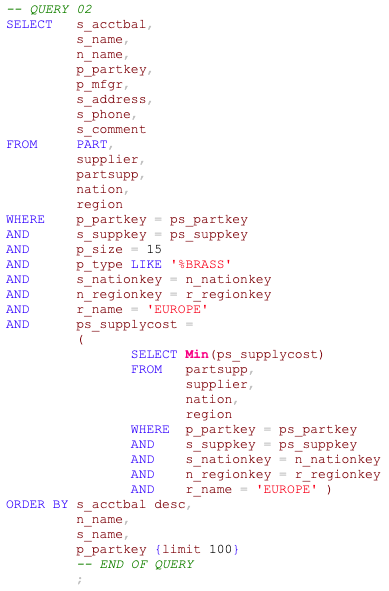

3. Don’t abandon SQL applications in favor of the NoSQL database.

The power of SQL is clear as NoSQL systems rush to put in SQL layers

A number of CIOs have considered building their next-generation operational applications using a NoSQL database such as HBase, Cassandra, Dynamo, and MongoDB. The primary justification for this approach is the amount, diversity, and velocity of data that modern applications have to manage. Traditional SQL databases such as DB2, Oracle, SQL Server, Postgres, MySQL, and others struggle in environments where data is generated by web applications, IoT devices, and sensors. These databases do not provide a practical way to scale or store semi-structured or unstructured data.

However, you should realize that replacing a back-end SQL database with a NoSQL database to modernize an application can introduce significant risk and expand the project scope. The lack of full SQL support in NoSQL databases means that a large amount of application code has to be rewritten. Additionally, developers with the required NoSQL expertise are significantly harder to find than the vast numbers of developers already fluent in SQL. Another factor that increases the risk of re-platforming on a NoSQL database is that the data model often needs to be redesigned entirely. The modified data model can result in significant data denormalization and duplication, which requires companies to write extensive new code to ensure that the duplicated data remains consistent across all of its copies. Finally, the performance of NoSQL databases may not be a slam dunk against legacy databases. NoSQL systems typically excel at short-running operational queries, but their performance on analytical queries is often poor and may not meet the application’s needs.
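The consistency burden of a denormalized model can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module for the SQL side and a plain list of dictionaries as a stand-in for a document store; the table names, field names, and the `rename_customer` helper are hypothetical and exist only for this example.

```python
import sqlite3

# Normalized SQL model: the customer's name is stored in exactly one row.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers(id),
        total REAL
    );
    INSERT INTO customers VALUES (1, 'Acme Insurance');
    INSERT INTO orders VALUES (10, 1, 250.0), (11, 1, 90.0);
""")

# A single UPDATE is enough; every order sees the new name through the join.
conn.execute("UPDATE customers SET name = 'Acme Mutual' WHERE id = 1")
sql_names = [row[0] for row in conn.execute(
    "SELECT c.name FROM orders o "
    "JOIN customers c ON c.id = o.customer_id ORDER BY o.id"
)]

# Denormalized document model: the name is duplicated into every order document.
order_docs = [
    {"order_id": 10, "customer_id": 1, "customer_name": "Acme Insurance", "total": 250.0},
    {"order_id": 11, "customer_id": 1, "customer_name": "Acme Insurance", "total": 90.0},
]


def rename_customer(docs, customer_id, new_name):
    """Application code must find and rewrite every duplicated copy itself."""
    touched = 0
    for doc in docs:
        if doc["customer_id"] == customer_id:
            doc["customer_name"] = new_name
            touched += 1
    return touched


copies_touched = rename_customer(order_docs, 1, "Acme Mutual")
doc_names = [doc["customer_name"] for doc in order_docs]

print(sql_names, doc_names, copies_touched)
```

In the normalized model the database keeps one copy of the fact, while in the denormalized model the application owns a helper like `rename_customer` for every duplicated field, and any copy it misses becomes an inconsistency.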

Given these considerations, if you are thinking about re-platforming a legacy application on a NoSQL database, consider instead one of the scale-out, distributed SQL platforms that are now available in the market. These platforms offer the benefits of NoSQL databases without the problems discussed above.

4. Don’t relegate data science to a backroom activity.

Break the silos on projects and include the business

Data science is the domain of mathematicians and statisticians who build sophisticated algorithms that mere mortals barely understand. Data scientists currently spend a lot of time waiting for data engineers to wrangle the data from a number of internal and external data sources. Then the data science team undergoes a rigorous phase of experimentation, creating feature sets that might contain predictive signal and applying analytics to those features. This training and testing workflow is tedious and complicated, and it requires tracking applications to help data scientists organize and audit their experiments. Once the model is built and trained, it is handed over to a team of application developers to inject into an application and put into production.

Therein lies the rub: organizations currently have a “data science team”, a “data engineering team” and an “application development team”, but each team by itself is ill-equipped to get the whole job done, from defining the business requirements to deploying the application into production. Data science, data engineering, and application development teams simply do not have deep enough knowledge of the business. Data science is a team sport in which data scientists, data engineers, and application developers work side by side with people who understand the business processes, in order to build an intelligent application that delivers tangible business outcomes.

If operationalizing artificial intelligence and machine learning as part of your mission-critical applications is high on your agenda, then it is imperative that you break down organizational silos and build multi-disciplinary teams comprising data engineers, application developers, data scientists, and subject-matter experts to build applications that will move the needle for the business.

To learn more about how to transform your business in 2020, download our whitepaper on application modernization and the critical role it plays in digital transformation.